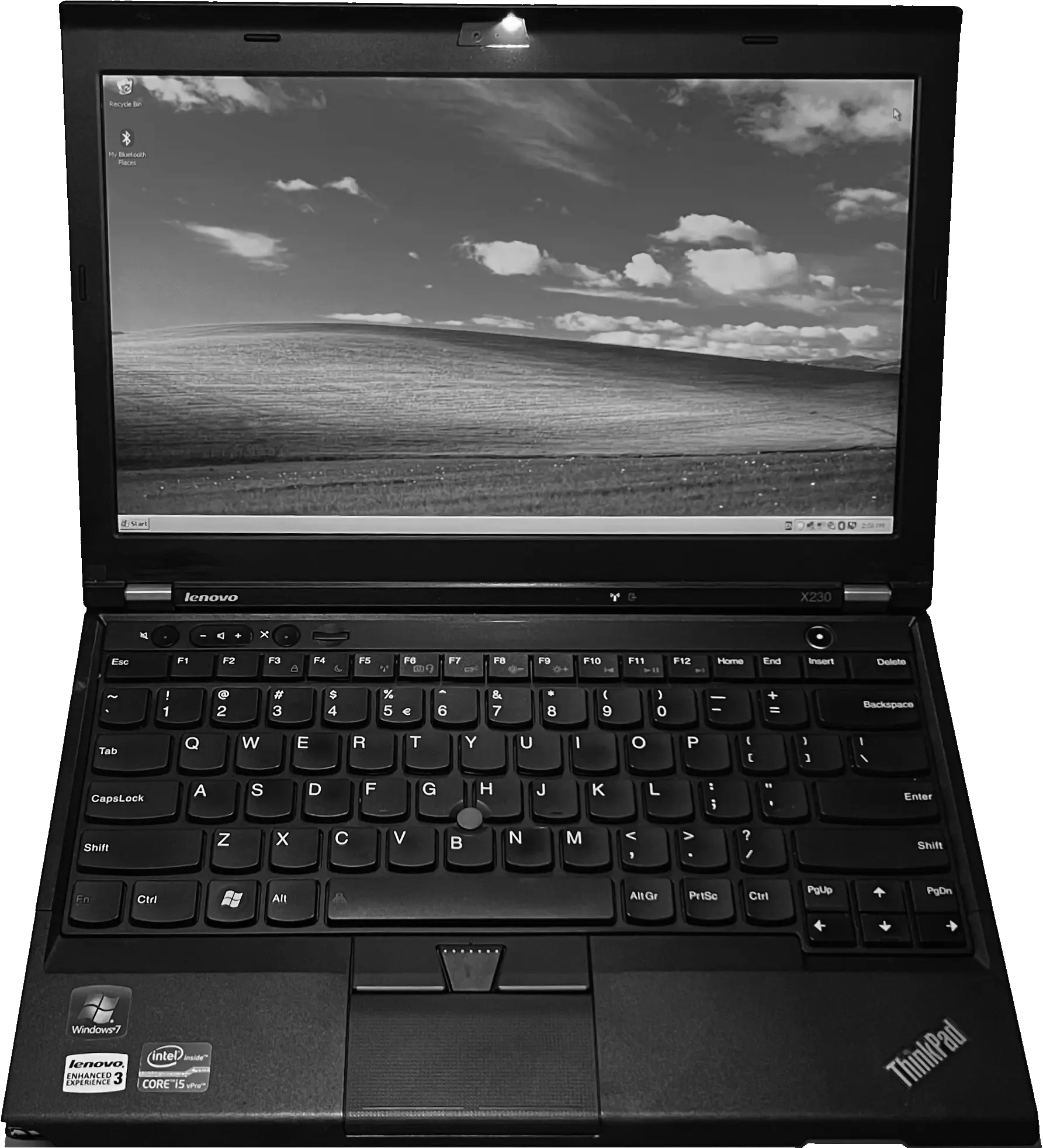

Old Laptop: Nostalgia Challenge

Mind wandering

For some time I had been toying with the idea of getting an old PC or laptop. The motivation: play old games, revisit the operating system of my childhood, put the machine in order, and mess with it as I please. Basically, the only specific requirement was a CPU from the Ivy Bridge generation, for full Windows XP support (yeah, I am not that old, so XP is what makes me nostalgic). It didn’t need a powerful CPU with a lot of cores or a dedicated GPU, as games from the 1990s and 2000s run fairly well on integrated graphics such as Intel Iris, or whatever you end up with.